Explore the Data

example2.RmdThe following Tutorial aims at evaluating the single models within the ARTMO Database. We provide useful plots and functions for analyzing the model performance with the Format genearated with the pervious MySQL access tutorial.

Essentials

In a second step we analyze the Database generated with the previous Tutorial. For demonstration purposes we used a test-rds stat file containg one small Database with LUT. For detecting the model performance we have to tidy the data again in order to have all the Statistics combined in a unique column. This is done with the formatTidy function. Important is that Each Type of Analysis is evaluated seperately (MLR, VI, Inversion).

stat<-readRDS("./data/demo.rds") %>% filter(!map_lgl(Results, is.null))

stat.td <- formatARTMO(stat)Analyze Database

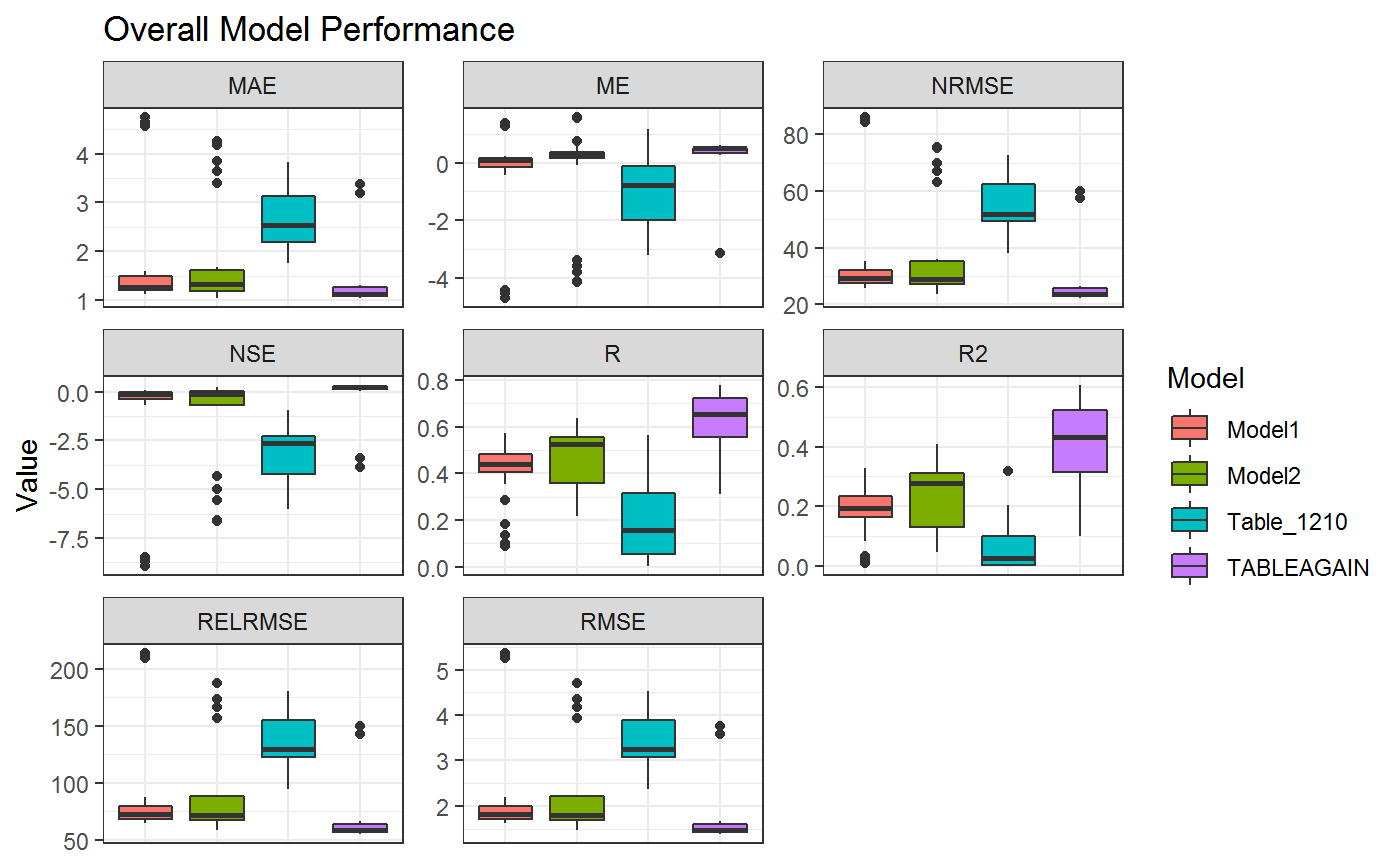

Model Performance

First of all the extracted and tidy data is stored for immediate analysis of the whole database. This section allows to assess the Model Performance independently for each Model stored within a Database. The overall performace can e visualized using:

ggplot(stat.td,aes(Model,Value,fill=Model))+

theme_bw()+

geom_boxplot()+

theme(axis.text.x = element_text(angle = 90, hjust = 1))+

facet_wrap(.~Statistic,scales = "free")+

ggtitle("Overall Model Performance")+

theme(axis.title.x=element_blank(),

axis.text.x=element_blank(),

axis.ticks.x=element_blank())

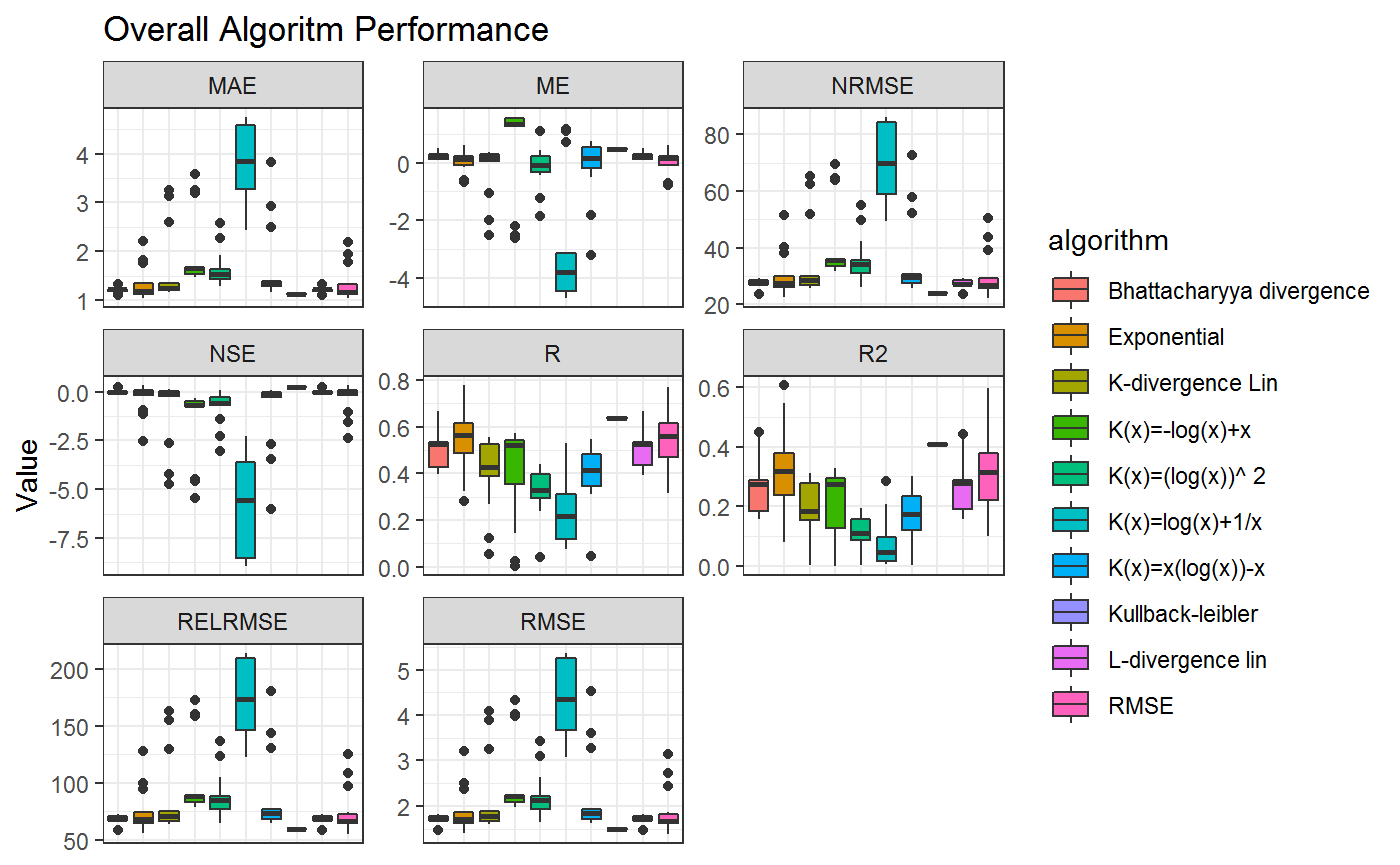

Algorithm Performance

This gives an overview on how the overall Statistics are with respect to the Model. If you want to analyze the Algorithms within each model the same can be applied to the algorithm instead of the model column

ggplot(stat.td,aes(algorithm,Value,fill=algorithm))+

theme_bw()+

geom_boxplot()+

theme(axis.text.x = element_text(angle = 90, hjust = 1))+

facet_wrap(.~Statistic,scales = "free")+

ggtitle("Overall Algoritm Performance")+

theme(axis.title.x=element_blank(),

axis.text.x=element_blank(),

axis.ticks.x=element_blank())

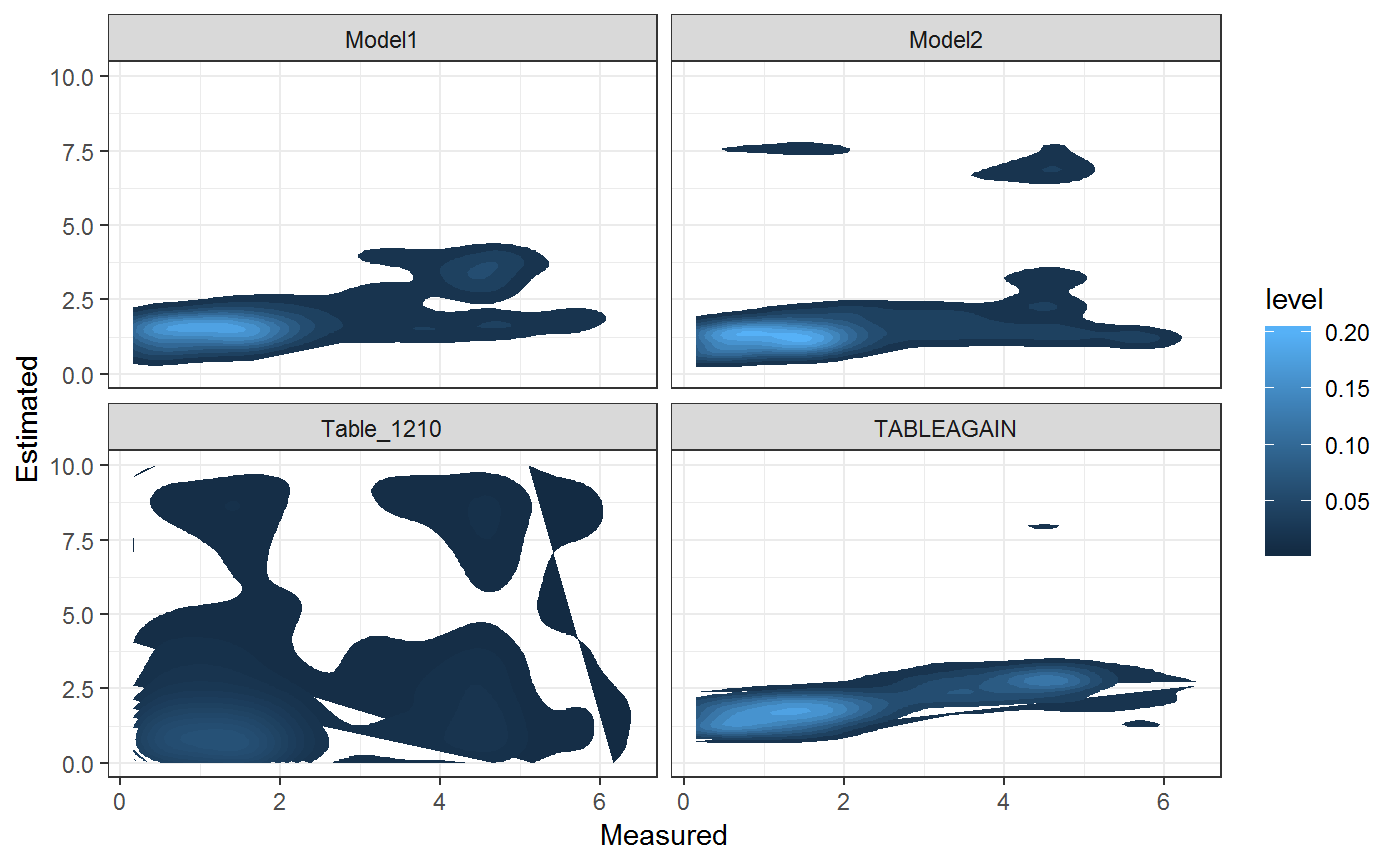

Estimation

Furthermore the Data Structure gives us the possibility to analyze the database as a whole and not just single Results

library("tidyr")

parameters<-c("algorithm","noise")

statistics<-"R2"

stat.model.all<-formatARTMO(jtable=stat,standard=F,parameters=parameters,statistics=statistics) %>%

unnest

ggplot(stat.model.all,aes(Measured,Estimated))+

stat_density_2d(aes(fill = ..level..), geom = "polygon")+

facet_wrap(.~Model)+

theme_bw()

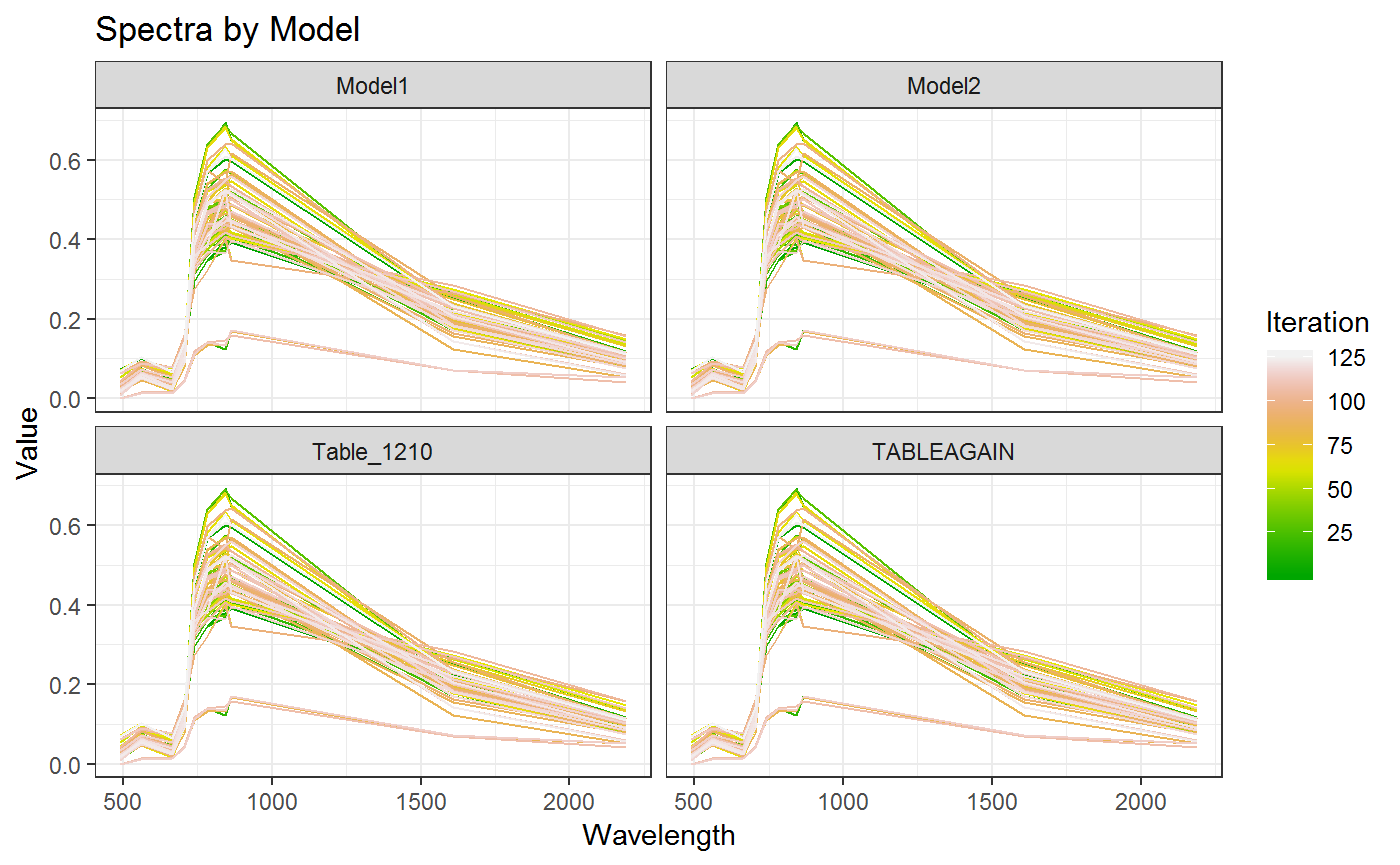

Nested Columns (Spectra)

In this way each of the colums within the Data Frame can be addressed. In order to address the nested ones (e.g. Spectra) we have to reduce the dimensionality and unnest them. Here it is convenient to select the Columns of interest for the plot. Here we selected Model and Spectra for gerating a plot taking into account each single Spectrum for the ARTMO LUT. Here all Spectra are the same since they were generated with the same validation table.

stat.td.spec<- stat %>%

select(Model,Spectra) %>%

unique %>%

filter(!map_lgl(Spectra, is.null)) %>%

unnest

g3<-ggplot(stat.td.spec,aes(Wavelength,Value,group=Iteration,color=Iteration))+

theme_bw()+

geom_line()+

ggtitle("Spectra by Model")+

facet_wrap(.~Model)+

scale_color_gradientn(colors=terrain.colors(200))

print(g3)

Parametrization

Often the most important fact about evaluating the Table is to understand basic parametrization as well as the applied algorithms. Dendrographs are a powerful tool to understand the recursive Linking of Parameter and Model. The `` package provides a powerful and interactive function based on HTML and Javascript for Dendropgraphs.

In this example we combined the Models (First Node) wth the parameters Alorithm, Noise, alfa, beta (Second Node)

library("collapsibleTree")

stat.gather<-stat %>%

select(Database,Model,Name1,algorithm,alfa,beta,noise) %>%

gather(Parameter,Name1,algorithm,alfa,beta,noise) %>%

unique %>%

setNames(c("level1","level2","level3","Value")) %>%

mutate(level1 = toupper(level1))

collapsibleTreeSummary(stat.gather,hierarchy=c("level1","level2","level3","Value"),

collapsed=F,

nodeSize="leafCount",root=NULL)Analyze Model

After having an overview on how the Database is structured and how it is performing we need to decide for a Model we’re going to use for the next steps of the evaluation. For Demonstration Purposes we go with the Model1 Model. we can reduce the dimensionality of the stat database by filtering it by the desired model

model<-"Model1"

stat.model<-stat.td %>% filter(Model==model)If we now are interested in testing a few parameters and their impact on the overall performance we can use the same approach as before while testing the complete database. First I would lke to try how the performance of the Model changes based on the noise I added to the Model

Model Parameters

stat1<-stat.model %>% mutate(noise=as.factor(noise))

ggplot(stat.model,aes(algorithm,Value,fill=algorithm,alpha=noise,group=noise))+

theme_bw()+

geom_bar(stat="identity",position="dodge")+

facet_wrap(Statistic~.,scales = "free")+

theme(axis.text.x = element_text(angle = 90, hjust = 1))+

ggtitle(paste0(model,": Performance for ", stat.model$Name1))+

theme(axis.title.x=element_blank(),

axis.text.x=element_blank(),

axis.ticks.x=element_blank())

Correlation

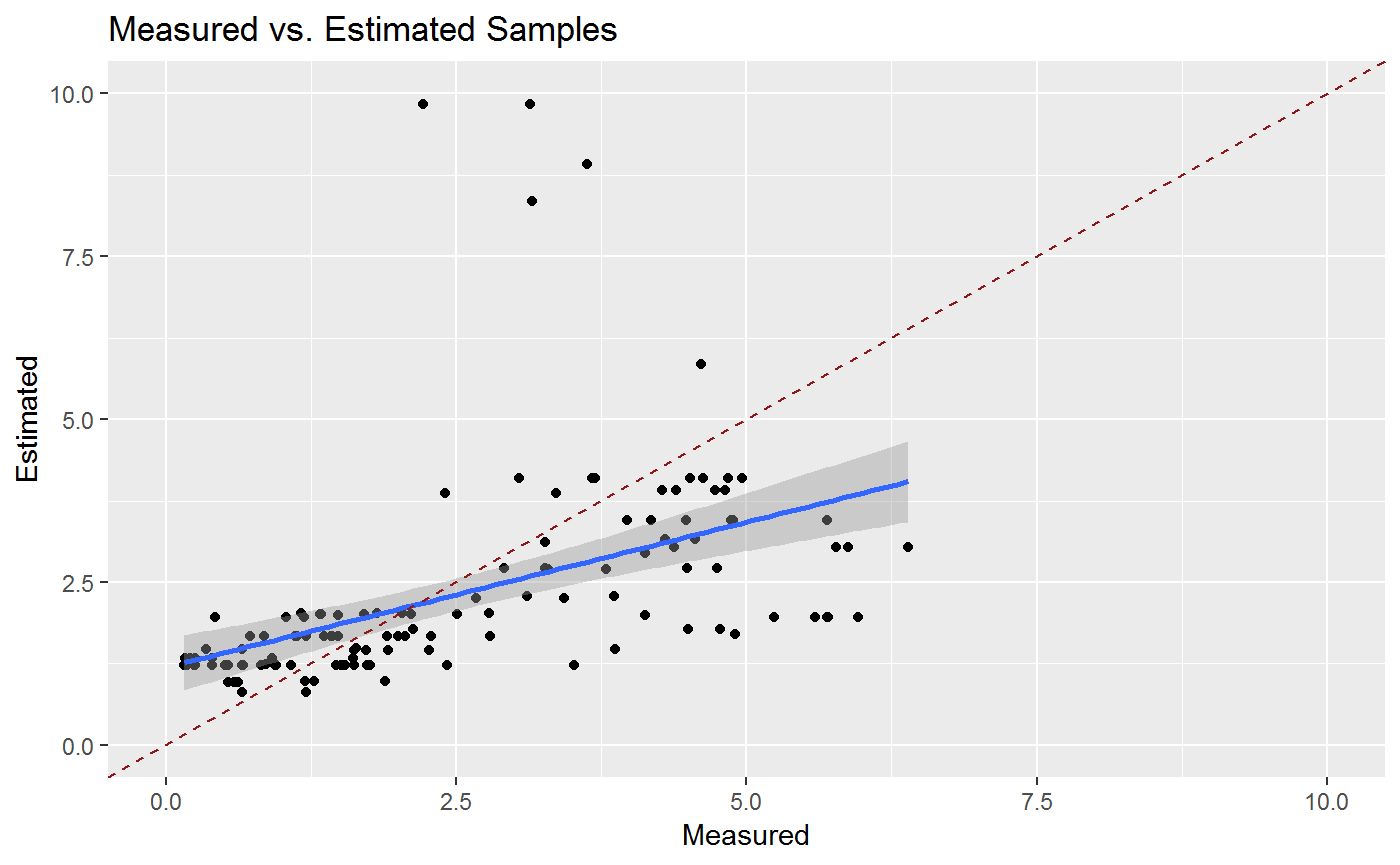

Even though each of the Statistics in this Example has not performed as we would have expected (low R2 and fairly high RMSE values) we can observe the difference between alorithms as well as the impact of the Noise on the Results. First of all we calculate the GGplots for each of the results. GGplots are mathematical rapresentations on how the plot will look like. The Graph is generated as soon as the object in the list is printed. Therefore we add a new column ResultGG to the Tibble containing the generated GGplots. These can be called later depending on the parameters desired.

statGG<-stat %>% mutate(ResultGG=pmap(.,function(...,Results){

g1<-ggplot(Results,aes(Measured,Estimated))+

geom_point()+

geom_smooth(method="lm")+

ggtitle("Measured vs. Estimated Samples")+

xlim(0,10)+ylim(0,10)+

geom_abline(intercept=0,slope=1,col="firebrick4",linetype=2)

return(g1)

}))

statGG## # A tibble: 125 x 36

## Model Database Project PY_ID Date name_class derivar size_data

## <chr> <chr> <chr> <chr> <chr> <chr> <int> <S3: int>

## 1 Mode~ artmo_1 Mattia1 1 2018~ Full_image 0 1000

## 2 Mode~ artmo_1 Mattia1 1 2018~ Full_image 0 1000

## 3 Mode~ artmo_1 Mattia1 1 2018~ Full_image 0 1000

## 4 Mode~ artmo_1 Mattia1 1 2018~ Full_image 0 1000

## 5 Mode~ artmo_1 Mattia1 1 2018~ Full_image 0 1000

## 6 Mode~ artmo_1 Mattia1 1 2018~ Full_image 0 1000

## 7 Mode~ artmo_1 Mattia1 1 2018~ Full_image 0 1000

## 8 Mode~ artmo_1 Mattia1 1 2018~ Full_image 0 1000

## 9 Mode~ artmo_1 Mattia1 1 2018~ Full_image 0 1000

## 10 Mode~ artmo_1 Mattia1 1 2018~ Full_image 0 1000

## # ... with 115 more rows, and 28 more variables: estadistico <int>,

## # Spectra <list>, LUT <list>, Name1 <chr>, Name2 <chr>, clave <chr>,

## # param_user <list>, algorithm <chr>, normalizar <int>, sim <S3:

## # integer64>, alfa <dbl>, beta <dbl>, noise <dbl>, train <dbl>,

## # ME <dbl>, RMSE <dbl>, RELRMSE <dbl>, MAE <dbl>, R <dbl>, R2 <dbl>,

## # NRMSE <dbl>, TS <dbl>, NSE <dbl>, tictoc <dbl>, Results <list>,

## # Store.ID <int>, Table_Type <chr>, ResultGG <list>For further Testing we use the Exponential Alorithm together eith a noise value of 5 and have a look at the difference between measurend and simulated data.

algorithm.s<-"Exponential"

noise.s<-1.25

gg.select<-statGG %>% filter(algorithm==algorithm.s & noise==noise.s)

gg1<-statGG$ResultGG[[1]]

gg1

And now interactively

library("plotly")

ggplotly(gg1)